The Web Audio API represents one of the most ambitious and controversial additions to the web platform. Designed to bring professional-grade audio processing to browsers, it promised to enable everything from game audio engines to digital audio workstations (DAWs) running entirely in the browser. More than a decade after Chrome first shipped it, the API is supported in every major browser and has enabled impressive demonstrations. Yet beneath the surface lies a more complicated story: one of design compromises, unmet expectations, and fundamental tensions between different visions of what audio on the web should be.

This is not just another technical critique. The Web Audio API’s troubled history reveals important lessons about web standards development, the challenges of designing APIs by committee, and the sometimes painful gap between what audio professionals think developers need and what developers actually need.

What Is the Web Audio API?

The Web Audio API is a high-level JavaScript API for processing and synthesizing audio in web applications. Unlike the simple <audio> element designed for basic playback, the Web Audio API provides a sophisticated graph-based system for routing and processing audio.

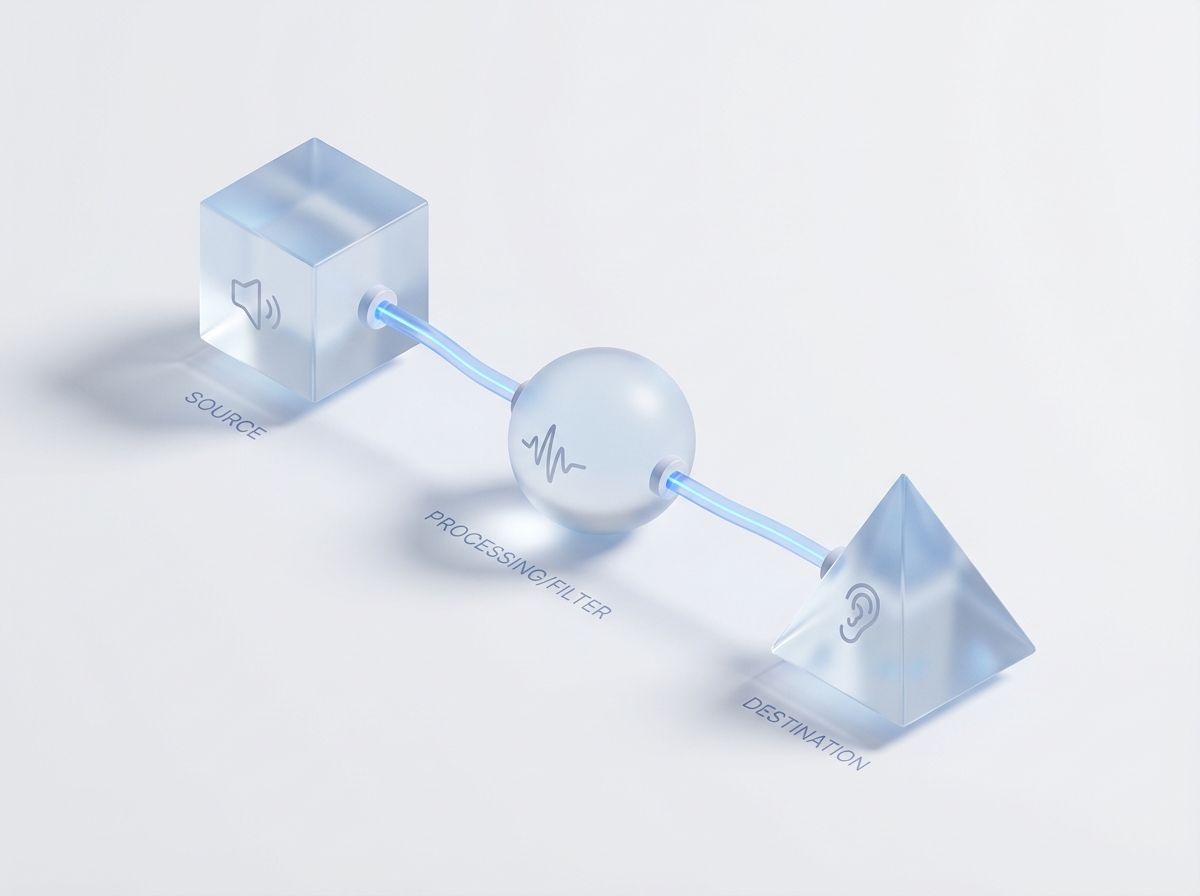

At its core, the API uses an audio routing graph made up of interconnected nodes:

- Source nodes (oscillators, audio buffers, media elements)

- Processing nodes (filters, compressors, reverb, analyzers)

- Destination nodes (speakers, recording outputs)
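To make the graph model concrete, here is a minimal sketch that routes a source node through two processing nodes to the destination. The helper name buildGraph and the parameter values are illustrative assumptions. The factory methods createOscillator(), createBiquadFilter(), and createGain() are standard Web Audio methods, as are connect(), start(), and stop(). The context is passed in as a parameter, so the function does not depend on how the AudioContext is created.

```javascript
// Build a small source -> processing -> destination graph.
// `ctx` is expected to be an AudioContext (or an object with the same factory methods).
function buildGraph(ctx) {
  // Source node: a 440 Hz sine oscillator.
  const osc = ctx.createOscillator();
  osc.type = "sine";
  osc.frequency.value = 440;

  // Processing nodes: a low-pass filter, then a volume control.
  const filter = ctx.createBiquadFilter();
  filter.type = "lowpass";
  filter.frequency.value = 1000; // cutoff in Hz (illustrative value)

  const gain = ctx.createGain();
  gain.gain.value = 0.2; // keep the test tone quiet

  // connect() returns its destination node, so the chain reads left to right.
  osc.connect(filter).connect(gain).connect(ctx.destination);

  return { osc, filter, gain };
}

// In a browser, typically after a user gesture (autoplay policies may suspend the context):
// const ctx = new AudioContext();
// const { osc } = buildGraph(ctx);
// osc.start();
// osc.stop(ctx.currentTime + 1); // play for one second
```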

According to the specification itself, the API has lofty ambitions: “It is a goal of this specification to include the capabilities found in modern game audio engines as well as some of the mixing, processing, and filtering tasks that are found in modern desktop audio production applications.”

This ambitious scope would prove to be both the API’s greatest strength and its most significant weakness.

{{ responsive_image(src="/images/blog/2025/10/web-audio-api-waveform.jpg", alt="Audio waveform visualization showing sound waves and frequency patterns", caption="The Web Audio API provides sophisticated audio processing capabilities through a node-based routing system") }}

The Design Philosophy: Everything and the Kitchen Sink

The Web Audio API emerged from work by Chris Rogers at Google, based heavily on Apple’s Core Audio framework. The design philosophy was clear: provide a comprehensive set of built-in audio processing nodes that would cover most common use cases without requiring developers to write low-level audio processing code.

The API includes nodes for:

- DynamicsCompressorNode - Audio compression

- ConvolverNode - Reverb and spatial effects

- BiquadFilterNode - Various filter types

- WaveShaperNode - Distortion effects

- PannerNode - 3D spatial audio

- AnalyserNode - Frequency analysis for visualizations

The reasoning seemed sound: JavaScript was too slow for real-time audio processing, and garbage collection would cause audio glitches. By providing these effects as native browser implementations, developers could build sophisticated audio applications without worrying about performance.

But this approach raised an immediate question: Who is this API actually designed for?

{{ responsive_image(src="/images/blog/2025/10/web-audio-api-development.jpg", alt="Web developer working on code with browser development tools open", caption="The Web Audio API’s design philosophy aimed to provide comprehensive audio processing without requiring low-level coding") }}

The Identity Crisis: Who Needs This?

Jasper St. Pierre, in his incisive 2017 blog post “I don’t know who the Web Audio API is designed for,” articulated a fundamental problem: the API seems to fall between multiple stools.

Not for Game Developers

Game developers typically use established audio middleware like FMOD or Wwise. These systems provide:

- Precisely specified behavior across platforms

- Extensive plugin ecosystems

- Professional tooling and workflows

- Deterministic, well-documented effects

The Web Audio API’s built-in nodes, by contrast, are often underspecified. As St. Pierre notes: “Something like the DynamicsCompressorNode is practically a joke: basic features from a real compressor are basically missing, and the behavior that is there is underspecified such that I can’t even trust it to sound correct between browsers.”

With the advent of WebAssembly, game developers can now compile their existing FMOD or Wwise code to run in the browser. Why would they abandon their proven tools for an underspecified browser API?

Not for Audio Professionals

Professional audio applications require:

- Precise control over every parameter

- Extensive effect libraries and third-party plugins

- Sample-accurate timing

- Deterministic behavior for reproducible results

The Web Audio API’s canned effects don’t come close to meeting these needs. A professional wouldn’t use a browser’s built-in compressor when they could use industry-standard plugins with decades of refinement.

Not for Simple Use Cases Either

Perhaps most frustratingly, the API also fails developers with simple needs: those who just want to generate and play audio samples programmatically.

St. Pierre provides a telling example. Here’s what a simple, hypothetical audio API might look like for playing a 440Hz sine wave:

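```javascript
// Hypothetical API: window.audio.newStream() does not exist in any browser.
const frequency = 440; // Hz

// One channel at 44,100 samples per second.
const stream = window.audio.newStream(1, 44100);

// Called whenever the stream needs more samples; `samples` is assumed to be an Int16Array.
stream.onfillsamples = function (samples) {
  const startTime = stream.currentTime; // seconds
  for (let i = 0; i < samples.length; i++) {
    const t = startTime + i / stream.sampleRate;
    // One cycle is 2π radians, so the phase is 2π·f·t; scale to the 16-bit range.
    samples[i] = Math.sin(2 * Math.PI * frequency * t) * 0x7FFF;
  }
};

stream.play();
```

Clean, simple, understandable. But the Web Audio API makes this surprisingly difficult.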
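The loop above is meant to compute a sampled sine wave. Written out, sample $n$ of a chunk is given below, where $f_s$ is the sample rate, $f$ is the tone frequency, $t_0$ is the chunk start time, and $A = \mathtt{0x7FFF} = 32767$ is the 16-bit peak amplitude:

$$
x[n] = A \sin\left(2\pi f \, t_n\right), \qquad t_n = t_0 + \frac{n}{f_s}
$$

At $f = 440$ Hz and $f_s = 44100$ Hz, one cycle spans $44100 / 440 \approx 100.2$ samples. The $2\pi$ factor matters because Math.sin takes radians. Computing $\sin(f\,t)$ instead would produce a tone at $f / 2\pi \approx 70$ Hz rather than 440 Hz.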

The Performance Paradox

The Web Audio API’s approach to avoiding JavaScript performance problems created new performance problems of its own.

The ScriptProcessorNode Debacle

The original mechanism for custom audio processing was ScriptProcessorNode. It had several critical flaws:

- No resampling support - The sample rate is fixed for the whole AudioContext, and a ScriptProcessorNode must produce samples at that rate. If your hardware runs at 48 kHz but your code generates 44.1 kHz audio, you have to resample it yourself in JavaScript.

- Main thread execution - The processing callback runs on the main thread, so any long-running script, layout work, or garbage collection pause can delay it and cause audible glitches when the page is busy.

- Deprecated before alternatives existed - ScriptProcessorNode was deprecated in 2014 in favor of “Audio Workers,” which were never implemented. They were then replaced by AudioWorklets, which took years to ship.
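Without resampling support, code that generates audio at one rate has to convert it to the context's rate itself. Below is a minimal sketch using linear interpolation, the simplest possible method. The function name resampleLinear is hypothetical. Real applications would normally use a higher-quality, band-limited resampler to avoid aliasing.

```javascript
// Convert a block of samples from one sample rate to another by linear interpolation.
// Linear interpolation is cheap but not band-limited, so it can introduce aliasing;
// it is used here only to show the shape of the problem.
function resampleLinear(input, fromRate, toRate) {
  const outLength = Math.floor((input.length * toRate) / fromRate);
  const ratio = fromRate / toRate; // input samples advanced per output sample
  const output = new Float32Array(outLength);
  for (let i = 0; i < outLength; i++) {
    const pos = i * ratio; // fractional read position in the input
    const idx = Math.floor(pos);
    const frac = pos - idx;
    const a = input[idx];
    // Past the last input sample, hold the final value.
    const b = idx + 1 < input.length ? input[idx + 1] : a;
    output[i] = a + (b - a) * frac;
  }
  return output;
}

// Example: 441 samples at 44.1 kHz (10 ms) become 480 samples at 48 kHz.
// const out = resampleLinear(chunk441, 44100, 48000);
```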

The BufferSourceNode Garbage Problem

The alternative approach using AudioBufferSourceNode has its own issues. To play continuous audio, you must:

- Create a new AudioBuffer for each chunk

- Create a new AudioBufferSourceNode for each chunk

- Schedule it to play at the right time

- Hope the garbage collector doesn’t cause glitches

As St. Pierre discovered: “Every 85 milliseconds we are allocating two new GC’d objects.” The documentation helpfully states that BufferSourceNodes are “cheap to create” and “will automatically be garbage-collected at an appropriate time.”

But as St. Pierre pointedly notes: “I know I’m fighting an uphill battle here, but a GC is not what we need during realtime audio playback.”
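The chunked pattern described above looks roughly like the sketch below. The helper scheduleChunk is hypothetical. The calls it makes (createBuffer(), copyToChannel(), createBufferSource(), and start(when)) are standard Web Audio methods. Each call allocates the two objects St. Pierre complains about. As an illustration, a 4096-frame chunk at 48 kHz lasts 4096 / 48000 ≈ 85.3 ms, which is consistent with the interval he quotes, though his exact buffer size is an assumption here.

```javascript
// Queue one chunk of mono samples to start exactly when the previous chunk ends.
// Returns the time (seconds, on the context clock) at which the next chunk should start.
function scheduleChunk(ctx, samples, startTime) {
  // Allocation 1: a new AudioBuffer to hold this chunk.
  const buffer = ctx.createBuffer(1, samples.length, ctx.sampleRate);
  buffer.copyToChannel(samples, 0);

  // Allocation 2: a new one-shot source node (a source node can only be started once).
  const source = ctx.createBufferSource();
  source.buffer = buffer;
  source.connect(ctx.destination);

  // Never schedule in the past; if we fell behind, start as soon as possible.
  const when = Math.max(startTime, ctx.currentTime);
  source.start(when);

  return when + samples.length / ctx.sampleRate;
}

// Usage sketch: keep a running "next start" time and call this whenever a chunk is ready.
// let next = ctx.currentTime;
// next = scheduleChunk(ctx, chunk, next);
```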

Floating Point Everything

Another complaint concerns sample formats: the API stores all sample data in Float32Arrays. Floats offer generous precision and headroom, but St. Pierre argues that integer formats would be cheaper and entirely adequate for output: “16 bits is enough for everybody and for an output format it’s more than enough. Integer Arithmetic Units are very fast workers and there’s no huge reason to shun them out of the equation.”
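For reference, converting Web Audio's float samples to 16-bit integers takes only a clamp and a scale. The floats are nominally in the range -1 to 1. Below is a minimal sketch. The helper name floatTo16Bit is hypothetical. It scales by 0x7FFF so that -1 and 1 map symmetrically, which is a common convention but not the only one.

```javascript
// Convert 32-bit float samples in [-1, 1] to signed 16-bit integers.
function floatTo16Bit(input) {
  const output = new Int16Array(input.length);
  for (let i = 0; i < input.length; i++) {
    // Clamp first: floats can exceed [-1, 1], and Int16Array assignment wraps on overflow.
    const s = Math.max(-1, Math.min(1, input[i]));
    output[i] = Math.round(s * 0x7FFF);
  }
  return output;
}
```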

{{ responsive_image(src="/images/blog/2025/10/web-audio-api-performance.jpg", alt="Abstract visualization of performance metrics and optimization", caption="Performance paradoxes emerged from the API’s attempts to avoid JavaScript performance problems") }}

The Road Not Taken: Mozilla’s Audio Data API

Robert O’Callahan, a Mozilla engineer who was deeply involved in the Web Audio standardization process, provides crucial historical context in his 2017 post “Some Opinions On The History Of Web Audio.”

Mozilla had proposed an alternative: the Audio Data API. It was much simpler:

- setup() - Configure the audio stream

- currentSampleOffset() - Get the current playback position

- writeAudio() - Write audio samples

This push-based API was straightforward, supported runtime resampling, and didn’t require breaking audio into garbage-collected buffers. It focused on the fundamental primitive: giving developers a way to generate and play audio samples.
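To illustrate the push model, here is a sketch of the kind of write loop the Audio Data API enabled. In Firefox these were methods on an <audio> element with a moz prefix: mozSetup(), mozCurrentSampleOffset(), and mozWriteAudio(). To keep the sketch runnable anywhere, it talks to a hypothetical stream object with unprefixed currentSampleOffset() and writeAudio() methods. The generate callback and the half-second lead are also assumptions. As with mozWriteAudio(), writeAudio() is assumed to return how many samples it accepted, since a full output queue may take fewer than offered.

```javascript
// Push-model audio: the page decides when and how much to write.
// `stream` is a hypothetical wrapper with currentSampleOffset() and writeAudio(samples);
// `generate(count)` returns a Float32Array of `count` new samples.
function createPump(stream, generate, sampleRate) {
  const lead = Math.floor(sampleRate / 2); // stay ~0.5 s ahead of playback (assumed)
  let written = 0;    // total samples accepted by the stream so far
  let pending = null; // samples the stream did not accept last time

  return function pump() {
    // Retry any leftover samples before generating new ones.
    if (pending) {
      const n = stream.writeAudio(pending);
      written += n;
      if (n < pending.length) {
        pending = pending.subarray(n);
        return; // output is still full; try again on the next tick
      }
      pending = null;
    }
    // Write just enough to keep `lead` samples queued ahead of the play position.
    const needed = stream.currentSampleOffset() + lead - written;
    if (needed > 0) {
      const chunk = generate(needed);
      const n = stream.writeAudio(chunk);
      written += n;
      if (n < chunk.length) pending = chunk.subarray(n);
    }
  };
}

// In a page, pump() would typically run on a timer, e.g. setInterval(pump, 10).
```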

Why Did Web Audio Win?

O’Callahan identifies several factors:

- Performance concerns - The working group believed JavaScript was too slow for audio processing and GC would cause glitches. (Ironically, the Web Audio API’s own design introduced GC issues.)

- Audio professional authority - “Audio professionals like Chris Rogers assured me they had identified a set of primitives that would suffice for most use cases. Since most of the Audio WG were audio professionals and I wasn’t, I didn’t have much defense against ‘audio professionals say…’ arguments.”

- Lack of engagement - Apple’s participation declined after the initial proposal. Microsoft never engaged meaningfully. Mozilla was largely alone in pushing for changes.

- Shipping before standardization - Google and Apple shipped Web Audio with a webkit prefix and evangelized it to developers. Once developers started using it, Mozilla had to implement it for compatibility.

O’Callahan reflects: “What could I have done better? I probably should have reduced the scope of my spec proposal… But I don’t think that, or anything else I can think of, would have changed the outcome.”

Modern Challenges: WebAssembly Integration

Fast forward to 2025, and WebAssembly has transformed what’s possible in browsers. Developers can now compile C++ audio processing code to run at near-native speeds. This should be the perfect complement to Web Audio, right?

Daniel Barta’s recent article “Web Audio + WebAssembly: Lessons Learned” reveals that integration remains problematic.

The Worker Problem

AudioContext cannot be used in Web Workers. This is a fundamental limitation that has been marked as an “urgent priority” for over eight years without resolution.

WebAssembly code often runs in workers to keep heavy computation off the main thread, which creates an architectural problem: WebAssembly audio code running in a worker can’t directly interact with the AudioContext.

No Shared Memory

The Web Audio API doesn’t support SharedArrayBuffer for data exchange. This has also been a documented, high-priority issue for over seven years.

Without shared memory, you must copy audio data between threads, introducing exactly the kind of inefficiency the API was supposed to avoid.
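A common workaround is a ring buffer in a SharedArrayBuffer. A worker (for example, one running WebAssembly) and the audio thread can both see it, and Atomics guard the read and write indices. The class below is a minimal single-producer, single-consumer sketch. The name SpscRingBuffer and its methods are hypothetical, in the spirit of community libraries such as ringbuf.js. In a browser, SharedArrayBuffer requires a cross-origin-isolated page. The audio thread still has to copy samples out of the ring into the arrays Web Audio hands it, so this narrows the copying problem rather than eliminating it.

```javascript
// Single-producer/single-consumer ring buffer of float samples in shared memory.
// Both sides construct it from the same SharedArrayBuffer (e.g. passed via postMessage).
class SpscRingBuffer {
  static create(capacity) {
    // 8 bytes of indices (read, write) followed by capacity + 1 float slots;
    // one slot stays empty so that "full" and "empty" can be told apart.
    return new SpscRingBuffer(new SharedArrayBuffer(8 + (capacity + 1) * 4));
  }

  constructor(sab) {
    this.sab = sab;
    this.indices = new Int32Array(sab, 0, 2); // [readIndex, writeIndex]
    this.data = new Float32Array(sab, 8);
    this.size = this.data.length;
  }

  availableToRead() {
    const r = Atomics.load(this.indices, 0);
    const w = Atomics.load(this.indices, 1);
    return (w - r + this.size) % this.size;
  }

  // Producer side: copy as many samples as fit; returns the number written.
  push(samples) {
    const r = Atomics.load(this.indices, 0);
    let w = Atomics.load(this.indices, 1);
    const free = (r - w - 1 + this.size) % this.size;
    const n = Math.min(free, samples.length);
    for (let i = 0; i < n; i++) {
      this.data[w] = samples[i];
      w = (w + 1) % this.size;
    }
    Atomics.store(this.indices, 1, w); // publish only after the data is written
    return n;
  }

  // Consumer side: fill `out` with up to out.length samples; returns the number read.
  pop(out) {
    let r = Atomics.load(this.indices, 0);
    const w = Atomics.load(this.indices, 1);
    const n = Math.min((w - r + this.size) % this.size, out.length);
    for (let i = 0; i < n; i++) {
      out[i] = this.data[r];
      r = (r + 1) % this.size;
    }
    Atomics.store(this.indices, 0, r); // release the slots back to the producer
    return n;
  }
}
```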

Incomplete Tooling

Emscripten provides helper methods for Web Audio, but as Barta discovered, “their implementation is incomplete.” The available methods were designed as basic helpers for testing, not production use.

Barta concludes: “A seamless experience seems within reach, and I am optimistic it will soon be realized. With these APIs and Chromium open for contributions, anyone—myself included—can actively participate in addressing these challenges.”

That optimism is admirable, but the fact that critical issues have remained unresolved for 7-8 years suggests systemic problems beyond just needing more contributors.

What Went Wrong? Lessons in API Design

The Web Audio API’s struggles illuminate several important principles:

1. Beware the “Everything API”

The API tried to be everything: a simple playback system, a game audio engine, and a professional audio workstation. This led to a bloated specification that serves none of these use cases particularly well.

Lesson: Focus on core primitives first. Let higher-level abstractions emerge from the community.

2. Don’t Assume You Know What Users Need

The working group assumed developers needed canned audio effects more than they needed simple, efficient sample playback. This assumption proved wrong.

Lesson: Talk to actual developers building real applications, not just audio professionals who understand the domain.

3. Shipping Beats Standardization

Google and Apple shipped Web Audio before the spec was finalized, forcing other browsers to implement it for compatibility. This locked in design decisions before they could be properly evaluated.

Lesson: The “ship it and see” approach can be valuable, but it can also entrench poor designs.

4. The Extensible Web Principle Came Too Late

Shortly after Web Audio first shipped, the “Extensible Web” philosophy, articulated in the 2013 Extensible Web Manifesto, became popular: provide low-level primitives and let developers build higher-level abstractions.

Web Audio is the antithesis of this approach. It provides high-level abstractions (DynamicsCompressorNode) without solid low-level primitives (efficient sample generation).

Lesson: Low-level primitives should come first. They’re harder to add later.

5. Authority Isn’t Always Right

The working group deferred to “audio professionals” who assured them the API would meet developer needs. Those professionals were wrong about what web developers actually needed.

Lesson: Domain expertise is valuable, but it’s not a substitute for user research and iterative design.

{{ responsive_image(src="/images/blog/2025/10/web-audio-api-design-lessons.jpg", alt="Software architecture diagram showing API design patterns and principles", caption="The Web Audio API’s challenges offer important lessons in API design and web standards development") }}

Current State: Adoption and Usage

Despite its flaws, the Web Audio API has achieved significant adoption:

- Universal browser support - All major browsers now implement the API

- Impressive demonstrations - Developers have built synthesizers, DAWs, games, and visualizations

- Active ecosystem - Libraries like Tone.js provide higher-level abstractions

However, in practice many applications appear to use only a small subset of the API:

- Simple playback with AudioBufferSourceNode

- Basic visualization with AnalyserNode

- Occasional use of GainNode for volume control

The sophisticated graph routing and built-in effects that drove the API’s design appear to be used far less often, and complex audio processing is increasingly done in WebAssembly rather than through Web Audio nodes.
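The common subset listed above fits in a few lines. This sketch decodes a sound file, plays it through a GainNode for volume, and taps an AnalyserNode for a simple level meter. The names playClip, averageLevel, and the file name clip.mp3 are hypothetical. The Web Audio methods it calls are standard: decodeAudioData(), createBufferSource(), createGain(), createAnalyser(), and getByteFrequencyData().

```javascript
// Decode compressed audio (e.g. an MP3 fetched as an ArrayBuffer) and play it once.
async function playClip(ctx, arrayBuffer, volume = 0.8) {
  const buffer = await ctx.decodeAudioData(arrayBuffer);

  const source = ctx.createBufferSource(); // simple playback
  source.buffer = buffer;

  const gain = ctx.createGain(); // volume control
  gain.gain.value = volume;

  const analyser = ctx.createAnalyser(); // visualization tap
  analyser.fftSize = 256; // yields fftSize / 2 = 128 frequency bins

  source.connect(gain).connect(analyser).connect(ctx.destination);
  source.start();
  return analyser;
}

// Average of the analyser's byte frequency bins (0-255), e.g. to drive a level meter.
function averageLevel(analyser) {
  const bins = new Uint8Array(analyser.frequencyBinCount);
  analyser.getByteFrequencyData(bins);
  let sum = 0;
  for (const v of bins) sum += v;
  return bins.length ? sum / bins.length : 0;
}

// Usage in a page:
// const ctx = new AudioContext();
// const data = await (await fetch("clip.mp3")).arrayBuffer();
// const analyser = await playClip(ctx, data);
// setInterval(() => console.log(averageLevel(analyser)), 100);
```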

The Path Forward

What would it take to fix the Web Audio API? Several improvements are needed:

Short Term

- Make AudioWorklet the standard path - It now ships in all major browsers and runs custom processing on the audio rendering thread instead of the main thread

- Add SharedArrayBuffer support - Enable zero-copy data sharing

- Support AudioContext in workers - Remove the artificial limitation

Long Term

- Provide a simple sample playback API - Something like Mozilla’s original Audio Data API

- Better specify existing nodes - Make behavior consistent across browsers

- Embrace WebAssembly - Design for integration with compiled audio code
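AudioWorklet, which now ships in all major browsers, gives the Web Audio API something close to St. Pierre’s “fill these samples” callback. Below is a minimal sine-generator sketch. The spec defines AudioWorkletProcessor, registerProcessor(), the process(inputs, outputs, parameters) method, and the global sampleRate inside the AudioWorklet scope. The processor name "sine-generator" is an assumption added for illustration, as are the guards that let the class load outside a browser.

```javascript
// sine-generator.js: loaded with `await ctx.audioWorklet.addModule("sine-generator.js")`.
// Guards let the file load outside an AudioWorkletGlobalScope (e.g. for testing).
const Base = typeof AudioWorkletProcessor !== "undefined" ? AudioWorkletProcessor : class {};
const rate = typeof sampleRate !== "undefined" ? sampleRate : 48000; // `sampleRate` is a worklet global

class SineGenerator extends Base {
  constructor() {
    super();
    this.phase = 0; // current phase in cycles, kept in [0, 1)
    this.frequency = 440; // Hz (fixed here for simplicity)
  }

  // Called on the audio rendering thread for each render quantum (typically 128 frames).
  process(inputs, outputs) {
    const output = outputs[0];
    if (output.length === 0) return true; // no channels connected yet
    const step = this.frequency / rate; // cycles advanced per sample
    for (let i = 0; i < output[0].length; i++) {
      const sample = 0.2 * Math.sin(2 * Math.PI * this.phase);
      for (const channel of output) channel[i] = sample;
      this.phase = (this.phase + step) % 1;
    }
    return true; // keep the processor alive
  }
}

if (typeof registerProcessor === "function") {
  registerProcessor("sine-generator", SineGenerator);
}

// Main thread (browser):
// const ctx = new AudioContext();
// await ctx.audioWorklet.addModule("sine-generator.js");
// new AudioWorkletNode(ctx, "sine-generator").connect(ctx.destination);
```

Compared with the hypothetical newStream() API, the sample loop is nearly identical. The extra ceremony lies in the separate module file, the registration step, and the node created on the main thread.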

The Realistic Outlook

The fact that critical issues have remained “urgent priorities” for 7-8 years suggests these fixes may never arrive. The Web Audio API may be locked into its current design indefinitely.

For developers, this means:

- Use WebAssembly for complex processing - Don’t rely on built-in nodes

- Keep it simple - Use the minimal subset of the API you need

- Expect inconsistencies - Test across browsers

- Consider alternatives - For some use cases, the <audio> element may be sufficient

Conclusion: A Cautionary Tale

The Web Audio API is a cautionary tale about the challenges of designing web standards. It shows what happens when:

- Ambitious goals override practical needs

- Authority substitutes for user research

- Shipping precedes standardization

- High-level abstractions come before low-level primitives

Yet it’s also a testament to the web platform’s resilience. Despite its flaws, developers have built remarkable things with the Web Audio API. Libraries have emerged to paper over its rough edges. WebAssembly provides an escape hatch for performance-critical code.

The API’s greatest legacy may not be the features it provides, but the lessons it teaches about web standards development. Future API designers would do well to study both its ambitions and its failures.

As Jasper St. Pierre concluded his critique: “Can the ridiculous overeagerness of Web Audio be reversed? Can we bring back a simple ‘play audio’ API and bring back the performance gains once we see what happens in the wild? I don’t know… But I would really, really like to see it happen.”

Eight years later, we’re still waiting.

Further Reading

- I don’t know who the Web Audio API is designed for - Jasper St. Pierre

- Some Opinions On The History Of Web Audio - Robert O’Callahan

- Web Audio + WebAssembly: Lessons Learned - Daniel Barta

- Web Audio API Specification - W3C

- MDN Web Audio API Documentation - Mozilla

What are your experiences with the Web Audio API? Have you encountered the issues discussed here, or found creative workarounds? Share your thoughts in the comments below.
