There’s something beautifully ironic happening on the web right now. Hundreds of websites have implemented a proposed standard called /llms.txt: a curated markdown file designed to help AI systems understand their content. Developers have built tools to generate these files. Community directories catalog implementations. SEO platforms flag sites that don’t have one.

There’s just one problem: not a single major AI platform actually uses it.

No, really. Not OpenAI. Not Google. Not Anthropic. Not Meta. The very systems that /llms.txt was designed to serve don’t even check if the file exists. Server logs confirm it: when AI crawlers visit your website, they sail right past your lovingly crafted llms.txt file without a second glance.

This isn’t just a story about a failed web standard. It’s a revealing case study in the power dynamics of the AI era, the challenges of grassroots standardization, and the growing tension between publishers and the platforms that increasingly control how their content reaches users. The /llms.txt saga tells us something important about who holds power in the AI/web ecosystem—and it’s not the people creating content.

What is /llms.txt?

Photo by Michael Burrows on Pexels

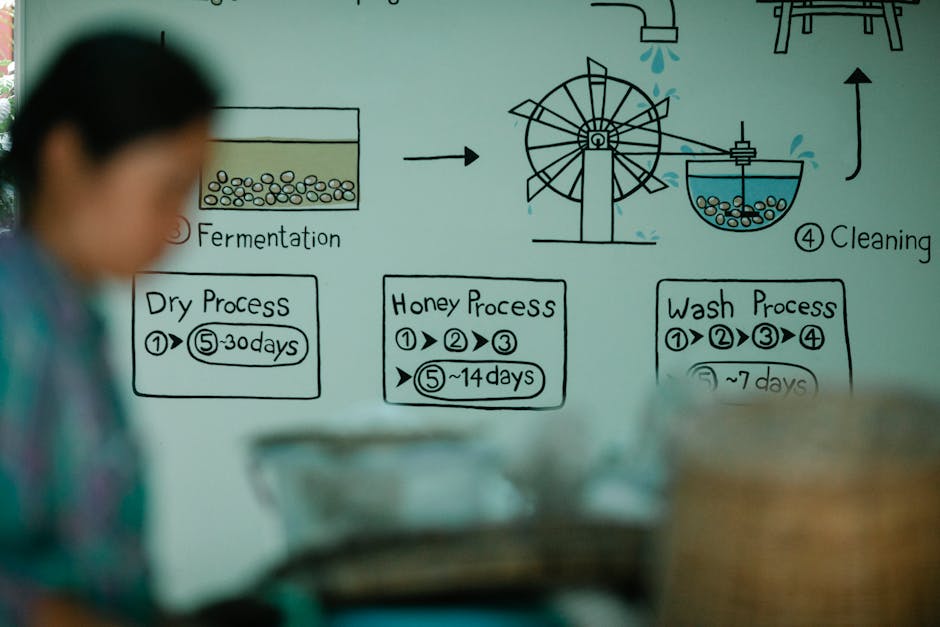

On September 3, 2024, Jeremy Howard—co-founder of fast.ai and creator of the popular nbdev framework—published a proposal for a new web standard. The idea was elegantly simple: websites would create a markdown file at /llms.txt that provides AI systems with a curated, structured overview of their content.

The problem Howard identified was real. “Large language models increasingly rely on website information,” he wrote, “but face a critical limitation: context windows are too small to handle most websites in their entirety.” Converting complex HTML pages—with their navigation menus, advertisements, JavaScript, and formatting—into clean, LLM-friendly text is both difficult and imprecise.

An /llms.txt file follows a specific structure:

- An H1 heading with the project or site name (the only required element)

- A blockquote containing a concise summary

- Optional detailed information about the project

- H2-delimited sections containing markdown lists of links to key resources

- An H2 section named “Optional” for secondary content that can be skipped when context is limited

Here’s a simplified example from the FastHTML project:

```markdown
# FastHTML

> FastHTML is a python library which brings together Starlette, Uvicorn,
> HTMX, and fastcore's `FT` "FastTags" into a library for creating
> server-rendered hypermedia applications.

## Docs

- [FastHTML quick start](https://fastht.ml/docs/tutorials/quickstart_for_web_devs.html.md): A brief overview of many FastHTML features
- [HTMX reference](https://github.com/bigskysoftware/htmx/blob/master/www/content/reference.md): Brief description of all HTMX attributes

## Optional

- [Starlette full documentation](https://example.com/starlette-sml.md): A subset of the Starlette documentation useful for FastHTML development
```

The proposal also suggested that individual pages offer markdown versions by appending .md to their URLs, so example.com/docs/guide.html would also be available at example.com/docs/guide.html.md.
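To make the structure concrete, here is a minimal sketch of a parser for the layout described above. It is not the reference implementation: the names `LlmsTxt`, `parse_llms_txt`, and `links_for_context` are hypothetical, the sample file is a shortened illustration, and the parser only handles the H1, the blockquote summary, and H2 sections containing link lists.

```python
import re
from dataclasses import dataclass, field

# Matches "- [title](url): optional note" list items.
LINK_RE = re.compile(
    r"^-\s*\[(?P<title>[^\]]+)\]\((?P<url>[^)\s]+)\)(?::\s*(?P<note>.*))?$"
)


@dataclass
class LlmsTxt:
    title: str = ""
    summary: str = ""
    # Section name -> list of {"title", "url", "note"} dicts, in file order.
    sections: dict[str, list[dict[str, str]]] = field(default_factory=dict)


def parse_llms_txt(text: str) -> LlmsTxt:
    """Parse the H1, blockquote summary, and H2 link sections of an llms.txt file."""
    doc = LlmsTxt()
    summary_lines: list[str] = []
    current = None  # name of the H2 section being read, if any
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("# ") and not doc.title:
            doc.title = line[2:].strip()
        elif line.startswith("## "):
            current = line[3:].strip()
            doc.sections[current] = []
        elif line.startswith(">") and current is None:
            summary_lines.append(line.lstrip(">").strip())
        elif current is not None:
            match = LINK_RE.match(line)
            if match:
                doc.sections[current].append(
                    {
                        "title": match.group("title"),
                        "url": match.group("url"),
                        "note": match.group("note") or "",
                    }
                )
    doc.summary = " ".join(summary_lines)
    return doc


def links_for_context(doc: LlmsTxt, include_optional: bool = False) -> list[str]:
    """Return link URLs, skipping the "Optional" section unless asked for."""
    return [
        link["url"]
        for name, links in doc.sections.items()
        if include_optional or name != "Optional"
        for link in links
    ]


# Shortened, illustrative sample (not a real site's file).
SAMPLE = """\
# FastHTML

> FastHTML is a python library for creating
> server-rendered hypermedia applications.

## Docs

- [FastHTML quick start](https://fastht.ml/docs/tutorials/quickstart_for_web_devs.html.md): A brief overview of many FastHTML features

## Optional

- [Starlette full documentation](https://example.com/starlette-sml.md): A subset of the Starlette documentation
"""

doc = parse_llms_txt(SAMPLE)
print(doc.title, list(doc.sections), links_for_context(doc))
```

A consumer with a small context window would call `links_for_context(doc)` and fetch only the core links; one with more room could pass `include_optional=True`.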

The Technical Elegance

From a design perspective, /llms.txt is quite clever. It follows the established pattern of /robots.txt and /sitemap.xml: small files at predictable locations that help automated systems understand websites. The choice of markdown as the format is inspired: it’s human-readable, machine-parseable, and already familiar to developers.

The standard strikes a nice balance between structure and flexibility. The single required element (the H1) and the fixed ordering of the optional parts keep files consistent, while the open-ended sections allow sites to organize information in ways that make sense for their specific content. The “Optional” section is particularly thoughtful: it acknowledges that LLMs with different context window sizes might need different amounts of information.

An ecosystem quickly emerged around the standard. The Python package llms-txt provides both a library and a CLI tool, llms_txt2ctx, for parsing llms.txt files and generating LLM-ready context. JavaScript implementations appeared. WordPress plugins and Drupal modules made implementation trivial for non-technical users. Community directories like llmstxt.site and directory.llmstxt.cloud began cataloging implementations.

The proposal even inspired creative extensions. Some projects generate “llms-full.txt” files containing the complete text of all linked documents, creating a single massive file that LLMs with large context windows could consume in one go. Guillaume Laforge, a developer advocate, demonstrated feeding his entire blog (682,000 tokens!) to Google’s Gemini using this approach, enabling sophisticated queries across his complete writing history.
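The “llms-full.txt” idea amounts to expanding every link in the index into one concatenated document. The sketch below shows one way that could work. The output layout is an assumption, since the article does not describe a fixed format for llms-full.txt. The function names and the four-characters-per-token estimate are also hypothetical, and `fetch` is injected so the example runs without network access.

```python
import re
from typing import Callable

# Finds markdown links of the form [title](url).
MD_LINK = re.compile(r"\[([^\]]+)\]\(([^)\s]+)\)")


def build_llms_full(llms_txt: str, fetch: Callable[[str], str]) -> str:
    """Append the text of every linked document to the index, in link order."""
    parts = [llms_txt.strip()]
    seen: set[str] = set()
    for title, url in MD_LINK.findall(llms_txt):
        if url in seen:  # do not inline the same document twice
            continue
        seen.add(url)
        body = fetch(url).strip()
        parts.append(f"\n\n---\n\n# {title}\nSource: {url}\n\n{body}")
    return "".join(parts) + "\n"


def rough_token_count(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough size estimate; real tokenizers will give different numbers."""
    return int(len(text) / chars_per_token)


# Illustrative index and stand-in page contents (all made up for the example).
INDEX = """\
# Demo

## Docs

- [Guide](https://example.com/guide.md): Intro
- [API](https://example.com/api.md): Reference
"""
FAKE_PAGES = {
    "https://example.com/guide.md": "Guide body.",
    "https://example.com/api.md": "API body.",
}

full_text = build_llms_full(INDEX, FAKE_PAGES.__getitem__)
print(rough_token_count(full_text), "tokens (rough estimate)")
```

In real use, `fetch` would be an HTTP client call, and the size estimate is what tells you whether the result fits a given model’s context window.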

The Adoption Reality Check

Photo by David Pupăză on Unsplash

Here’s where the story takes a turn. Despite the technical elegance, the growing ecosystem, and hundreds of implementations, /llms.txt faces a fundamental problem: the platforms it was designed for aren’t using it.

In July 2025, nearly a year after the proposal’s launch, Ahrefs published a blunt analysis: “no major LLM provider currently supports llms.txt. Not OpenAI. Not Anthropic. Not Google.” This wasn’t speculation—it was based on server log analysis showing that AI crawlers simply don’t request llms.txt files when they visit websites.

Google’s John Mueller, a Search Relations team member, was even more direct: “none of the AI services have said they’re using LLMs.TXT (and you can tell when you look at your server logs that they don’t even check for it).” He compared the protocol to the keywords meta tag—a once-popular HTML element that search engines eventually ignored because it was too easily manipulated.
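Checking your own logs, as Mueller suggests, takes only a few lines. The sketch below assumes access logs in the common “combined” format and matches a small, hand-picked list of crawler user-agent tokens. The sample log lines are invented for illustration, and `llms_txt_hits` is a hypothetical helper name.

```python
import re
from collections import Counter

# User-agent substrings to look for; extend this list for your own analysis.
AI_CRAWLERS = ("GPTBot", "ClaudeBot", "PerplexityBot")

# Pulls the request path and user agent out of a combined-format log line.
LOG_RE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def llms_txt_hits(log_lines):
    """Return {crawler: (total_requests, llms_txt_requests)} for known AI crawlers."""
    visits, hits = Counter(), Counter()
    for line in log_lines:
        match = LOG_RE.search(line)
        if not match:
            continue
        agent = match["ua"].lower()
        bot = next((b for b in AI_CRAWLERS if b.lower() in agent), None)
        if bot is None:
            continue  # not one of the crawlers being tracked
        visits[bot] += 1
        if match["path"].split("?", 1)[0] == "/llms.txt":
            hits[bot] += 1
    return {bot: (visits[bot], hits[bot]) for bot in visits}


# Invented sample lines, only to show the expected input shape.
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Jul/2025:12:00:01 +0000] "GET /robots.txt HTTP/1.1" 200 312 "-" "Mozilla/5.0 (compatible; GPTBot/1.2; +https://openai.com/gptbot)"',
    '203.0.113.5 - - [10/Jul/2025:12:00:02 +0000] "GET /docs/guide.html HTTP/1.1" 200 8841 "-" "Mozilla/5.0 (compatible; GPTBot/1.2; +https://openai.com/gptbot)"',
    '198.51.100.7 - - [10/Jul/2025:12:03:10 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0; +claudebot@anthropic.com)"',
    '192.0.2.9 - - [10/Jul/2025:12:05:44 +0000] "GET /llms.txt HTTP/1.1" 200 640 "-" "Mozilla/5.0 (X11; Linux x86_64) Firefox/128.0"',
]

for bot, (total, llms) in llms_txt_hits(SAMPLE_LOG).items():
    print(f"{bot}: {total} requests, {llms} for /llms.txt")
```

A crawler that shows many requests overall and zero for /llms.txt matches the pattern described above. A request for the file from an ordinary browser, like the last sample line, is not counted.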

The irony deepens when you look at who’s implementing llms.txt. Anthropic, the company behind Claude, publishes its own llms.txt file. But Anthropic doesn’t state that its crawlers actually use the standard when visiting other sites. It’s the equivalent of putting up a sign in your window while ignoring everyone else’s signs.

This has created a strange situation where SEO tools are recommending something that provides no demonstrated benefit. Semrush began flagging missing llms.txt files as site issues, prompting frustrated discussions in marketing forums. “Why should I incentivize people to get everything they need from an AI response and NOT visit their website?” one marketer asked, capturing the deeper tension.

Ryan Law, Director of Content Marketing at Ahrefs, put it succinctly: “llms.txt is a proposed standard. I could also propose a standard (let’s call it please-send-me-traffic-robot-overlords.txt), but unless the major LLM providers agree to use it, it’s pretty meaningless.”

Why Platforms Aren’t Adopting It

The non-adoption of /llms.txt isn’t random—it reflects fundamental misalignments in incentives and power.

The Traffic Paradox: AI platforms face a basic conflict. Publishers want AI systems to send users to their websites. But platforms like Google, OpenAI, and Anthropic increasingly want to answer questions directly, keeping users within their own interfaces. Google’s AI Overviews, for instance, have reduced organic clicks by 34.5% according to recent studies. Why would these platforms adopt a standard that makes it easier to send users away?

Existing Alternatives: From the platforms’ perspective, they already have tools for understanding websites. Sitemaps list all pages. Structured data markup (Schema.org) provides semantic information. robots.txt indicates crawling preferences. The platforms have sophisticated systems for extracting and understanding content from HTML. They don’t necessarily need publishers to create special markdown files.

Control vs. Cooperation: The /llms.txt proposal assumes a cooperative model where publishers and platforms work together. But the current AI/web ecosystem is increasingly adversarial. According to HUMAN Security, 80% of companies now actively block AI crawlers. Publishers feel their content is being used without fair compensation. Platforms feel entitled to crawl public web content. A voluntary standard requires trust that simply doesn’t exist.
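For contrast, blocking is something publishers can already express today through robots.txt. The sketch below uses Python’s standard `urllib.robotparser` to evaluate an illustrative rule set. The rules and the `may_crawl` helper are assumptions made for the example, not any particular site’s policy, and whether a crawler honors them is up to the crawler.

```python
from urllib.robotparser import RobotFileParser

# Illustrative policy: block one AI crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def may_crawl(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Evaluate a robots.txt body for one user agent and URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(may_crawl("GPTBot", "https://example.com/docs/"))
print(may_crawl("ExampleBrowser", "https://example.com/docs/"))
```

Both robots.txt and /llms.txt depend on voluntary compliance. The difference is that crawlers actually request robots.txt.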

The Standardization Chicken-and-Egg: For /llms.txt to succeed, it needs critical mass. But publishers won’t invest in creating comprehensive llms.txt files if platforms don’t use them. And platforms won’t build support for a standard that few sites implement. Without a forcing function—like regulatory requirements or industry consortium agreements—this deadlock persists.

Brett Tabke, CEO of Pubcon and WebmasterWorld, argued that the whole thing is redundant: “XML sitemaps and robots.txt already serve this purpose.” From a platform perspective, he might be right.

What This Tells Us About the AI/Web Ecosystem

The /llms.txt story reveals deeper truths about how AI is reshaping the web.

Power Asymmetry: The most obvious lesson is about power. Publishers can propose standards, build tools, and implement files on their servers. But if platforms choose not to participate, none of it matters. This is fundamentally different from earlier web standards like RSS or microformats, which succeeded because they provided value to publishers independent of platform adoption. You could use RSS to syndicate your content whether or not Google Reader existed.

The Illusion of Control: Many publishers are implementing /llms.txt because it feels like taking control in an uncertain landscape. “Everyone’s scrambling in a dark room where nothing’s clearly visible,” one SEO practitioner wrote. Creating an llms.txt file is concrete, actionable, and follows best practices. But it’s ultimately performative—a ritual that provides psychological comfort without functional benefit.

Grassroots vs. Platform-Driven Standards: The web has a history of both grassroots standards (like markdown itself) and platform-driven standards (like AMP). The successful grassroots standards typically solved problems for creators independent of platform adoption. The /llms.txt proposal, despite its grassroots origins, requires platform cooperation to function. It’s a grassroots standard with a platform-dependent value proposition—perhaps an inherently unstable combination.

The Broader Context: This is happening against a backdrop of increasing tension between publishers and AI platforms. AI search visitors reportedly convert at 4.4 times the rate of traditional organic visitors, which makes AI traffic valuable. But AI Overviews and chatbot answers are reducing the traffic publishers receive. Meanwhile, platforms face their own challenges: Google’s AI Overviews have significant spam problems, and the quality of AI-generated answers remains inconsistent.

The /llms.txt saga is a microcosm of these larger conflicts. Publishers want standards that give them agency. Platforms want flexibility to optimize their systems. Users want accurate, helpful answers. These interests don’t naturally align.

Future Scenarios: Where Does This Go?

Photo by MICHAEL CHIARA on Unsplash

Looking ahead, several scenarios seem possible:

Scenario 1: Permanent Niche Status

The most likely outcome is that /llms.txt remains a niche practice among developer-focused sites and AI enthusiasts. It becomes a signal of technical sophistication rather than a functional standard—similar to how some sites still maintain RSS feeds even though RSS usage has declined. There’s no harm in this, but also limited benefit.

Scenario 2: Regulatory or Consortium-Driven Adoption

If regulations emerge requiring AI platforms to respect publisher preferences, /llms.txt could become part of the compliance framework. Alternatively, an industry consortium (perhaps involving publishers, platforms, and civil society groups) could negotiate standards for AI/web interaction, with llms.txt as one component. This would require significant external pressure.

Scenario 3: Evolution into Something Else

The core ideas behind /llms.txt—structured, curated content for AI systems—might evolve into different implementations. Perhaps platforms develop their own submission systems (like Google Search Console but for AI). Or maybe the approach merges with existing standards like structured data markup. The specific llms.txt format might fade, but the underlying need persists.

Scenario 4: Unexpected Platform Adoption

It’s possible that a major platform could adopt /llms.txt as a differentiator. A new AI search engine trying to compete with Google might embrace it as a way to build publisher goodwill. Or an existing platform might adopt it in response to competitive pressure or regulatory scrutiny. This seems unlikely but not impossible.

What Would Need to Change? For meaningful adoption, we’d need:

- Clear value proposition for platforms (not just publishers)

- Incentive alignment or regulatory requirements

- Demonstration of superior results compared to existing methods

- Critical mass of high-quality implementations

- Platform commitment to transparency about usage

None of these seem imminent.

What Should You Actually Do?

If you’re a publisher or developer wondering whether to implement /llms.txt, here’s a practical framework:

Don’t implement it if:

- You’re doing it solely for SEO benefit (there is none currently)

- You’re hoping it will increase AI-driven traffic (it won’t)

- You’re resource-constrained and need to prioritize

Consider implementing it if:

- You’re in the developer tools or technical documentation space where early adopters might manually use it

- You want to signal technical sophistication to your audience

- You’re already creating markdown documentation and it’s trivial to add

- You’re experimenting with AI-assisted documentation systems

- You want to be prepared if adoption happens later
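If you already keep a list of your documentation pages, “trivial to add” can be taken literally. The sketch below renders an llms.txt file from a small data structure. The `render_llms_txt` function, the site name, and the example.com URLs are all hypothetical.

```python
def render_llms_txt(title: str, summary: str, sections: dict) -> str:
    """Render an llms.txt body: H1, blockquote summary, then H2 link sections.

    `sections` maps a heading to a list of (name, url, note) tuples.
    """
    lines = [f"# {title}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines += [f"## {heading}", ""]
        for name, url, note in links:
            suffix = f": {note}" if note else ""
            lines.append(f"- [{name}]({url}){suffix}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Placeholder content for illustration only.
EXAMPLE = render_llms_txt(
    "Example Docs",
    "Documentation for a hypothetical developer tool.",
    {
        "Docs": [
            ("Quick start", "https://example.com/docs/quickstart.md", "Install and first steps"),
            ("API reference", "https://example.com/docs/api.md", ""),
        ],
        "Optional": [
            ("Changelog", "https://example.com/docs/changelog.md", "Release history"),
        ],
    },
)
print(EXAMPLE)
```

Writing the result to the site root as llms.txt during your docs build keeps it in sync with the pages it lists, at essentially no ongoing cost.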

Focus instead on:

- Creating high-quality, well-structured content

- Using existing standards properly (sitemaps, structured data)

- Optimizing for how AI systems actually work today

- Building direct relationships with your audience

- Diversifying traffic sources beyond search and AI

The harsh reality is that AI optimization remains more art than science. Aleyda Solis, a respected SEO expert, released comprehensive AI search optimization guidelines that focus on content structure, crawlability, and quality—fundamentals that matter regardless of specific standards.

The Value of the Attempt

Despite its current limitations, the /llms.txt proposal isn’t worthless. It represents an important attempt to establish norms for AI/web interaction. It sparked conversations about publisher agency, platform responsibility, and the future of web standards. It demonstrated what a cooperative approach could look like, even if cooperation isn’t currently happening.

Jeremy Howard’s proposal also highlighted a real problem: the web wasn’t designed for AI consumption, and AI systems weren’t designed for the web’s complexity. That tension won’t resolve itself. We need standards, protocols, and norms for this new era. The /llms.txt approach might not be the answer, but asking the question was valuable.

There’s also something admirable about the attempt to solve problems through open standards rather than proprietary systems. In an era of increasing platform consolidation, grassroots standardization efforts matter—even when they fail. They remind us that the web’s architecture isn’t predetermined, that alternatives exist, and that communities can propose different futures.

Conclusion: Lessons from a Standard in Limbo

The /llms.txt story is still being written, but its current chapter offers clear lessons. Technical elegance doesn’t guarantee adoption. Grassroots enthusiasm can’t overcome platform indifference. Standards that require cooperation struggle in adversarial environments. Power matters more than good ideas.

But perhaps the most important lesson is about the changing nature of the web itself. The era when publishers and platforms had aligned interests—when helping search engines understand your content meant more traffic—is ending. The AI age introduces new dynamics where platforms can extract value from content without sending users to sources. In this environment, voluntary standards face steep challenges.

For now, /llms.txt exists in a strange limbo: implemented but unused, promoted but ineffective, elegant but irrelevant. It’s a monument to good intentions in an ecosystem increasingly defined by conflicting interests.

Whether it eventually succeeds, evolves into something else, or fades into obscurity, the /llms.txt experiment will remain a fascinating case study in the challenges of standardization in the AI era. It shows us both the possibilities of cooperative approaches and the harsh realities of power asymmetries.

The web has always been shaped by the tension between openness and control, cooperation and competition, idealism and pragmatism. The /llms.txt standard embodies all these tensions. Its fate will tell us something important about which forces prevail in the AI age.

For now, the elegant solution sits unused, waiting for a problem that the powerful have chosen not to solve.
